- Systems/AI engineer specializing in C++ kernels and LLM inference on NPU/GPU.

- At Microsoft (AI Frameworks, Vancouver): work on MAIA kernels and inference pipelines; build high-performance AI software in C/C++/Python; collaborate with HW/ML teams to optimize large-scale training/inference on Microsoft accelerators.

- Previously at Huawei: co-developed sgmv/bgmv LoRA operators for vLLM (Ascend) and led database-engine performance work.

- Core skills: profiling-driven SIMD, tiling, memory-layout design, and throughput tuning across kernels, runtime, and I/O.

- MASc, University of Waterloo (persistent memory).

- Open source: vLLM Ascend LoRA kernels (sgmv, bgmv)

- Tutoring: Algorithms, Data Structures, C++, Python.

Nov 2024 - Present | Microsoft — AI Frameworks | Vancouver, Canada

- Optimize C++ LLM kernels and Python bindings for Microsoft AI accelerators (MAIA), improving inference throughput and latency in internal benchmarks.

- Implemented MAIA-specific kernels, delivering ∼40% lower latency versus prior Triton implementations across targeted ops.

- Co-designed microbenchmarks, productionized changes with CI, and profiled end-to-end paths (kernels, runtime, I/O); reduced stalls via SIMD, tiling, cache-friendly layouts, and double buffering.

Sep 2023 - Nov 2024 | Huawei Technologies Canada | Burnaby, Canada

LLM Inference (May 2024 - Nov 2024):

- Co-developed Ascend NPU operators (sgmv, bgmv) to accelerate LoRA in vLLM and validated them on multi-card nodes; the team subsequently open-sourced them in the vLLM Ascend repo.

- Achieved 3-5x speedups over a batched matrix multiplication (BMM) baseline across diverse LoRA distribution scenarios.

- Built latency/throughput regression tests and CI checks; profiled kernels and runtime to remove bottlenecks.

Database Systems (Sep 2023 - May 2024):

- Led R&D for an in-memory OLAP DB; designed and implemented asynchronous scan operators.

- Increased CPU utilization by 20-40% on TPC-H queries via vectorized execution, cache-aware algorithms, and I/O overlap.

Open source: the Ascend kernels were released in vllm-project/vllm-ascend, along with documentation of how the NPU-specific sgmv/bgmv paths integrate with vLLM to speed up LoRA serving on Ascend hardware.

Sep 2021 - Sep 2023 | University of Waterloo | Waterloo, Canada

Research on shared-memory algorithms.

Designed and implemented snapshotting mechanisms for persistent memory-mapped files and detectable objects for persistent memory.

Jan 2022 - Apr 2023 | University of Waterloo | Waterloo, Canada

Assisted with teaching courses in Algorithm Design, Database Systems, Systems Programming, and Data Abstraction.

May 2022 - Aug 2022 | Huawei Technologies Canada Co., Ltd. | Waterloo, Canada

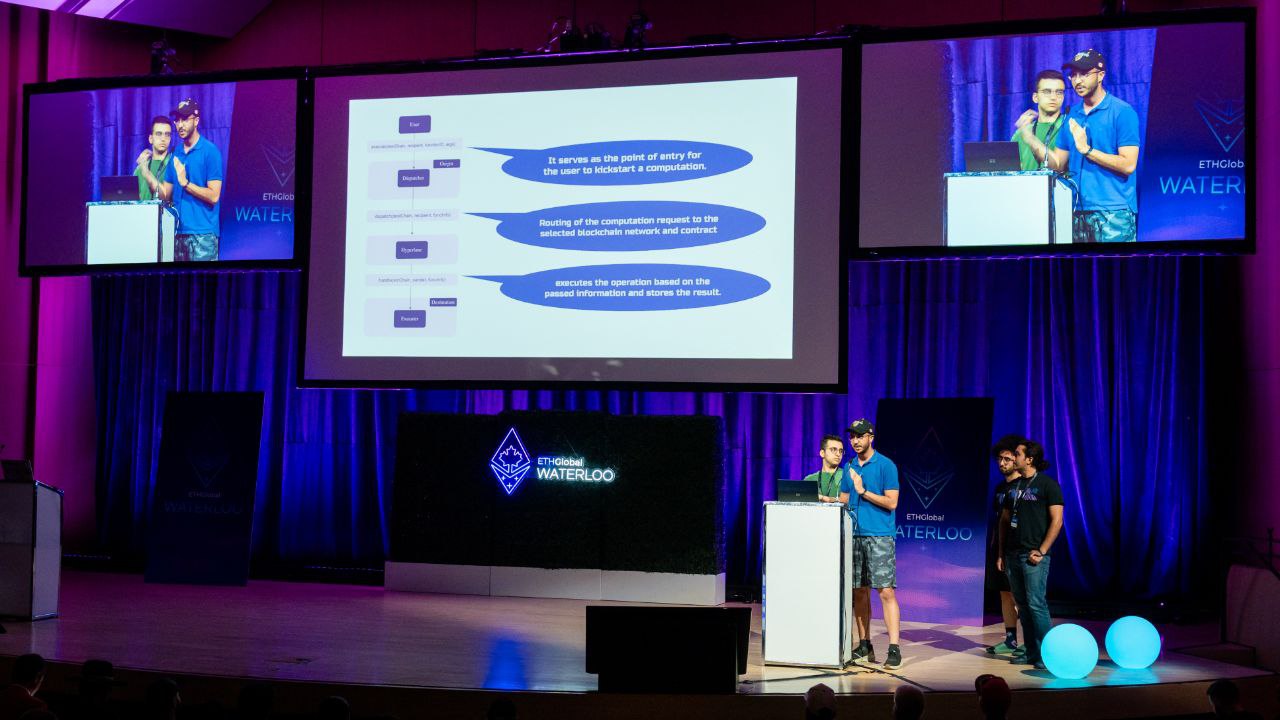

Worked on a collaborative project called Towards a High-velocity Permissioned Blockchain.

Oct 2018 - Jul 2021 | University of Tehran | Tehran, Iran

Assisted with more than 15 courses, including Advanced Programming and Operating Systems.

Oct 2019 - Apr 2021 | University of Tehran Science & Technology Park | Tehran, Iran

Analyzed social network behavior in the Ethereum network using graph analysis.

Jul 2019 - Sep 2019 | University of Tehran Science & Technology Park | Tehran, Iran

Participated in several Kaggle competitions and developed solutions using Scikit-Learn.

Sep 2021 - Sep 2023 | University of Waterloo | Waterloo, Canada

Grade: 94 / 100. Thesis on snapshotting mechanisms for persistent memory-mapped files.

Sep 2016 - Jul 2021 | University of Tehran | Tehran, Iran

Grade: 90 / 100. Thesis on social network analysis within the Ethereum ecosystem.

Published in ApPLIED@PODC 2024. DOI: 10.1145/3663338.3665832

Investigates ways to enhance the reliability of persistent memory systems, focusing on snapshotting mechanisms and their role in system resilience. Introduces new snapshotting consistency models and mechanisms to improve performance and enhance system responsiveness. Provides experimental analysis demonstrating throughput and latency improvements.

Published in ApPLIED@PODC 2022. DOI: 10.1145/3524053.3542749

Explores the adaptation of multi-core algorithms to persistent memory, introducing the "Unified Detectable Sequential Specification" (UDSS), which simplifies interfaces and coding. Experiments conducted using Intel Optane memory demonstrate the performance implications of the implementation.

vLLM Ascend Kernels (sgmv, bgmv)

Co-developed NPU-specific operators to accelerate LoRA serving on Ascend hardware and integrated them into vLLM's execution path. Validated on multi-card nodes and later open-sourced by the team.

github.com/vllm-project/vllm-ascend/tree/main/csrc/kernels

- 3-5x speedups over BMM baselines for diverse LoRA distribution scenarios.

- Latency/throughput regression tests and CI checks to prevent performance regressions.

- Documented NPU-specific integration for kernel, runtime, and I/O paths.

Montage (UW) - Online Snapshotting

Research code and experiments for online snapshotting used in persistent-memory work at the University of Waterloo.

gitlab.uwaterloo.ca/mmoridi/montage-uw/-/tree/online-snapshotting

UDSS (Unified Detectable Sequential Specification)

Implementation supporting detectable objects / persistent-memory queue experiments with Prof. Golab's group.

git.uwaterloo.ca/wgolab/DSSQueue

These contributions align with my current work on C++ kernels, LLM inference, and profiling-driven optimization (SIMD, tiling, cache-friendly layouts) across kernels, runtime, and I/O.

Issued by University of Waterloo in Oct 2021.

Issued by IEEE University of Tehran Student Branch in Jan 2020.

Issued by IEEE University of Tehran Student Branch in Jan 2020.

Issued by University of Tehran in Apr 2019.

Issued by Huawei Technologies Canada in Oct 2024.

Received the Best Team Award from the President of the Canadian Research Center for outstanding contributions to LLM serving performance.

Issued by ETHGlobal in Jun 2023.

Project: Smarter Contract.

Issued by Hyperlane in Jun 2023.

Project: Smarter Contract.

Issued by Waterloo Blockchain in May 2023.

Project: Glue.

Issued by University of Waterloo in Sep 2021.